Time and time again I have read articles from agencies and practitioners attempting to define a sound analytics process. In my posts about the definition of analytics and the use of a RACI matrix to define roles and responsibilities, I alluded to Lean Six Sigma (LSS for short). Daniel Waisberg also wrote an excellent article about the Web Analytics Process back in 2011. So why, you may ask, talk about the analytics process again?

Firstly, repetition is the first principle of learning. Secondly, our research on organizational analytics maturity clearly reveals that the analytics process and continuous improvement methodology is the weakest of the six areas outlined in the Online Analytics Maturity Model. You can do your own self-assessment to find out how you score and how you rank against the benchmark.

What Is Good For Them Must Be Good For Us

The concepts of Lean and Six Sigma stem from the Toyota and Motorola manufacturing world of the late ’70s. With pressure for quality and speed, a systematic method to reduce waste and defects was required. Championed by companies such as GE Capital, Caterpillar and Lockheed Martin, these simple concepts gained immense attention and proved successful for many organizations: even the US Army adopted LSS.

Six Sigma focuses on improving quality by eliminating defects; Lean streamlines processes by eliminating waste.
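Six Sigma expresses quality as defects per million opportunities (DPMO); the "six sigma" target corresponds to roughly 3.4 DPMO. As a minimal sketch, assuming you can count defects (for example, errors during checkout) and the number of opportunities for a defect in each unit, the calculation looks like this in Python. The 1.5-sigma shift is the conventional Six Sigma adjustment; the function names and the checkout numbers are hypothetical.

```python
from statistics import NormalDist


def dpmo(defects: int, units: int, opportunities_per_unit: int) -> float:
    """Defects per million opportunities."""
    if units <= 0 or opportunities_per_unit <= 0:
        raise ValueError("units and opportunities_per_unit must be positive")
    return defects * 1_000_000 / (units * opportunities_per_unit)


def sigma_level(dpmo_value: float, shift: float = 1.5) -> float:
    """Short-term sigma level, using the conventional 1.5-sigma shift."""
    defect_rate = dpmo_value / 1_000_000
    if not 0 < defect_rate < 1:
        raise ValueError("DPMO must be strictly between 0 and 1,000,000")
    # z-score of the defect-free share of a standard normal distribution, plus the shift
    return NormalDist().inv_cdf(1 - defect_rate) + shift


# Hypothetical example: 10,000 checkout sessions, 4 steps where an error
# can occur, 120 errors logged in total.
rate = dpmo(120, 10_000, 4)
print(f"DPMO: {rate:.0f}, sigma level: {sigma_level(rate):.2f}")
```

Counting "opportunities" rather than units is what lets you compare a simple form with a complex multi-step funnel on the same scale.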

The beauty of LSS is that it has stood the test of time through years of use in diverse environments and organizations of all sizes. Furthermore, LSS is well documented in articles, books, classes and certifications.

Both Lean and Six Sigma have been criticized for their almost religious approach, with “Black Belt” super-gurus, and for an over-reliance on methods and tools that can lead to analysis paralysis and kill creativity. Any methodology or model has its caveats, and as an analyst, you should be able to judge what makes sense for you. In my view, the benefits of LSS far outweigh the inconveniences.

Lean Six Sigma Precepts

The goal for digital analysts isn’t necessarily to become Six Sigma Black Belts. The precepts of Lean Six Sigma are simple:

- User-centric: Recognize opportunities and eliminate defects as defined and perceived by your customers;

- Consistent quality: Variation in processes hinders your ability to deliver consistently high-quality services;

- Data-driven culture: Foster a culture of effectiveness and objective-driven management;

- Velocity: Maximizing process velocity reduces the time between a user’s goal and conversion, between problem identification and resolution, or between identifying an opportunity and capturing it;

- Value: Separate value-adding from non-value-adding activities to eliminate causes of waste;

- Tools & framework: Leverage quality tools in a framework suited for effective problem solving and data-driven decisions;

- Quantify complexity: Provide the means to quantify and eliminate the cost of complexity.

DMAIC: The Lean Six Sigma Mantra

Like in fairy tales, repeat DMAIC three times and the magic will start to happen. DMAIC is an acronym for Define-Measure-Analyze-Improve-Control, a continuous improvement process with clearly defined steps. In general, it’s better to evolve processes than to change them radically. The concept of “velocity” also means this five-step approach becomes a tight, efficient way to continuously deliver small, incremental improvements.

Define

State the hypothesis or problem to be solved, and define what is expected and what success would look like. At this early stage, you can already attempt to understand the actual process (BPMN notation is well suited for this), the scope of work and its relationship with other aspects of the business; identify what is critical to quality (as perceived by users); and determine who will be involved (refer back to my RACI article). This is also where you define a SMART objective, and believe me, don’t underestimate this important point. Even with experience, defining a really good SMART objective that everyone can endorse is not an easy task, but it remains essential. After all, as Jim Sterne likes to say, “How you measure success depends on how you define success”.
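One way to keep the SMART criteria (Specific, Measurable, Achievable, Relevant, Time-bound) honest is to write the objective down as a structured record rather than a loose sentence. The sketch below is a hypothetical Python structure, not a standard schema: the class name, field names and example values are all illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SmartObjective:
    """Hypothetical record for a SMART objective (not a standard schema)."""
    specific: str     # Specific: what exactly should change
    metric: str       # Measurable: the KPI used to judge success
    baseline: float   # current value of the metric
    target: float     # Achievable: the agreed target value
    relevance: str    # Relevant: why this matters to the business
    deadline: date    # Time-bound: when the target should be reached
    accountable: str  # who is accountable (the "A" in a RACI matrix)

    def is_met(self, actual: float, as_of: date) -> bool:
        """True if the target is reached on or before the deadline.

        Handles metrics that should go up (target above baseline)
        and metrics that should go down (target below baseline).
        """
        if self.target >= self.baseline:
            reached = actual >= self.target
        else:
            reached = actual <= self.target
        return reached and as_of <= self.deadline


# Hypothetical objective for illustration only
objective = SmartObjective(
    specific="Reduce checkout abandonment on mobile devices",
    metric="Mobile checkout abandonment rate (%)",
    baseline=68.0,
    target=60.0,
    relevance="Mobile checkout is a priority for the business",
    deadline=date(2025, 6, 30),
    accountable="E-commerce product owner",
)
print(objective.is_met(actual=59.5, as_of=date(2025, 6, 1)))
```

If a field is hard to fill in, that is usually a sign the objective isn’t SMART yet.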

Measure

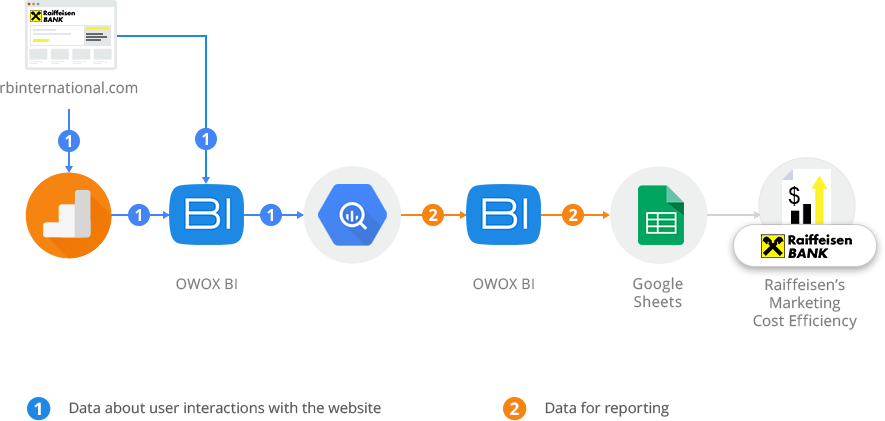

Combining data with knowledge and experience is what separates true improvement from mere process tinkering. Identify your data sources, check whether you lack the right data for the task (think “multiplicity”!) and conduct audits to ensure data quality. You can also run a simple, preliminary analysis of the data. By the end of this step, the problem (or opportunity) should be clearer and understood by all stakeholders.
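A first data-quality audit doesn’t require specialized tooling. As a minimal sketch, assuming your records are exported as a list of Python dictionaries, the following counts missing values in required fields and flags duplicate identifiers; the function name, the field names (`order_id`, `revenue`, `channel`) and the sample rows are hypothetical.

```python
from collections import Counter


def audit(rows, required_fields, id_field):
    """Return a simple data-quality report for a list of dict records."""
    missing = Counter()
    for row in rows:
        for field in required_fields:
            # Treat absent keys, None and empty strings as missing values
            if row.get(field) in (None, ""):
                missing[field] += 1
    # Any identifier that appears more than once is a potential duplicate
    id_counts = Counter(row.get(id_field) for row in rows)
    duplicate_ids = [key for key, count in id_counts.items() if count > 1]
    return {"rows": len(rows), "missing": dict(missing), "duplicate_ids": duplicate_ids}


# Hypothetical export of order records
rows = [
    {"order_id": "A1", "revenue": 120.0, "channel": "email"},
    {"order_id": "A2", "revenue": None, "channel": "search"},
    {"order_id": "A2", "revenue": 80.0, "channel": "search"},
    {"order_id": "A3", "revenue": 45.5, "channel": ""},
]
report = audit(rows, required_fields=["revenue", "channel"], id_field="order_id")
print(report)
```

A real audit would also compare totals against a second source (for example, analytics revenue against the order system), but even these simple counts often surface tagging problems early.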

Analyze

By the end of this step, the problem (or opportunity) will be clearly identified. Business requirements, and how they will be met, are understood. Functional requirements, constraints and impacts on processes and resources are known. You should be able to map this initiative onto the overall architectural landscape of personas > goals & activities > outcomes. Don’t forget that you might identify new metric requirements, or find impacts on KPIs and dashboards.

Improve

At this step, we want to show that we can solve the problem (or capture the opportunity). The success criteria are revisited and confirmed. Risks are uncovered and mitigation strategies are developed. Here, you might conduct an A/B or multivariate (MVT) test to confirm your approach. Oh! Don’t forget about change management, training and communicating the good news!
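To judge whether an A/B test result is more than noise, a common approach for conversion rates is the two-proportion z-test. The sketch below assumes a fixed-horizon test with reasonably large samples and no peeking at interim results; the function name and conversion counts are hypothetical, and the 0.05 threshold is a convention rather than a rule.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        raise ValueError("test is undefined when all or no visitors convert")
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control converted 200 of 5,000 visitors, variant 250 of 5,000
z, p_value = two_proportion_z_test(200, 5_000, 250, 5_000)
print(f"z = {z:.2f}, p = {p_value:.4f}, significant at 0.05: {p_value < 0.05}")
```

A significant result confirms the direction of the change; whether the lift is large enough to matter is still a business judgment tied back to the SMART objective.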

Control

To quote Jim Sterne again, “It’s not about getting the right answer, it’s about asking the right questions and providing insight”. The Control step revisits the outcomes of the project and measures actual performance against the stated objectives. Keep an eye on your changes and get ready to enter the improvement cycle again.
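Keeping an eye on your changes is where Six Sigma’s control charts come in. As a sketch, assuming you track a daily metric such as conversion rate, an individuals (XmR) chart computes natural process limits from a baseline period; points outside those limits after a change suggest a real shift rather than routine variation. The function names and the data below are hypothetical.

```python
from statistics import mean


def xmr_limits(values):
    """Lower limit, center line and upper limit for an individuals (XmR) chart."""
    if len(values) < 2:
        raise ValueError("need at least two observations")
    center = mean(values)
    # Average absolute difference between consecutive observations
    mr_bar = mean(abs(b - a) for a, b in zip(values, values[1:]))
    # 2.66 = 3 / 1.128, where 1.128 is the d2 constant for ranges of two points
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar


def out_of_control(values, limits):
    """Indexes of observations that fall outside the control limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical daily conversion rates (%) before and after a site change
baseline = [3.1, 3.3, 3.0, 3.2, 3.4, 3.1, 3.2, 3.0, 3.3, 3.2]
post_change = [3.2, 3.6, 4.1, 3.9]

limits = xmr_limits(baseline)
print("limits: %.2f / %.2f / %.2f" % limits)
print("signals after the change:", out_of_control(post_change, limits))
```

Computing the limits from the pre-change baseline, rather than from all the data, keeps the improvement itself from widening the limits.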

Your assignment!

If analytics is how an organization arrives at optimal and realistic decisions through analysis and data, then analysis is the process of breaking something complex into smaller parts so we can understand how it works, and optimize it.

Be nimble about Lean Six Sigma. To get started, just remember the high-level DMAIC approach and create a document outlining the five steps. For each step, think about the following points:

- Define: What’s the objective? Can you state it as a SMART one? Who will be involved or impacted? What is in or out of scope?

- Measure: What are the data sources? Anything missing? Is it good quality data? Can you gain initial insight from this data without too much effort?

- Analyze: What are the correlations and patterns? This is where your analysis skills are truly put to work!

- Improve: Be creative and think about various solutions that balance the optimal solution against what is realistic given the constraints.

- Control: It’s not over until you can report the impact of your work. Be humble, and remember that improvement rarely comes from a sudden 100% increase in something, but from small improvements to dozens of little things.
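For the Analyze step, a quick first look at patterns can be as simple as a correlation coefficient. Below is a minimal Pearson correlation sketch in plain Python; the function name and the weekly page load times and conversion rates are hypothetical. Keep in mind that correlation alone doesn’t establish causation, which is why the Improve step tests the hypothesis.

```python
from math import sqrt
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 points)")
    mx, my = mean(xs), mean(ys)
    # Sums of cross-products and squares; the 1/n factors cancel in the ratio
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant series")
    return sxy / sqrt(sxx * syy)


# Hypothetical weekly averages: page load time (seconds) vs. conversion rate (%)
load_time = [1.2, 1.8, 2.5, 3.1, 3.9]
conversion = [4.1, 3.8, 3.2, 2.9, 2.4]
print(f"r = {pearson_r(load_time, conversion):.2f}")
```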

I’ve had people tell me, “Yeah, Stephane, this is nice in theory and in classrooms, but it doesn’t work in the real world…” This usually comes from people who don’t actually have any methodology or alternative to suggest. So I challenge you: do it and let me know how it goes!