This April, Google announced Content Experiments for mobile apps, managed through the Google Tag Manager (GTM) platform. This is great news for app developers, as it allows faster and more reliable iteration and optimization of app usage in ways that have not been possible before.

I previously wrote an introductory post about Google Tag Manager for mobile apps; do check it out if you are looking for an introduction to the product and how it works.

Apps usually have several configuration values hard-coded: they determine how the app behaves, what content is displayed to users and how, various settings, and so on. Many of the challenges app developers face arise when these configurations need to be changed or adapted in some way. Unlike websites, where we can iterate and change such values continuously and relatively quickly, mobile apps are by nature frozen once published. Changing anything after that point inevitably means shipping a new binary to the app marketplace and hoping for the best when it comes to users adopting the update.

This is one of the challenges that Google Tag Manager seeks to address, with the end goal of moving from a world of constants to one of dynamism, from static to highly configurable apps.

While GTM has been on the market for a while now, the ability to run content experiments for mobile apps is a new and revolutionary feature of the product. In line with the agenda of dynamism, it enables app developers to change configuration values at runtime, for example to try different variations of a configuration through A/B and multivariate tests. The appeal should be obvious: trying something new no longer requires a traditional development cycle, which can be both lengthy and costly.

This opens the door to testing and analysis of the important business questions we likely ask ourselves about our apps on a daily basis:

- How often should x, y, or z be promoted to the user base?

- What messages are most successful in incentivizing desirable behavior within our app? What is the best wording?

- Is content variation a or b most effective in driving in-app revenue?

- What are the best settings to use for a given user segment?

The short answer is that we do not know until we test each of them and perform careful analysis of the outcome. And once we can answer these questions with statistical significance, we can also easily tie the results back to the other key metrics we care about, such as engagement, monetization, and interactions.
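To make "statistical significance" a little more concrete, here is a minimal sketch of a classical two-proportion z-test that compares the conversion rates of two variations at the 95% level. This is a textbook technique shown for illustration only: Google Analytics applies its own statistical model when it evaluates experiments, and the class name and counts below are hypothetical.

```java
/**
 * Classical two-proportion z-test, shown for illustration only.
 * Google Analytics uses its own statistical model to evaluate experiments.
 */
final class TwoProportionZTest {
    // Two-sided critical value of the standard normal distribution at the 95% level.
    static final double Z_CRITICAL_95 = 1.959963984540054;

    private TwoProportionZTest() {}

    /** z statistic for the null hypothesis that both groups convert at the same rate. */
    static double zScore(long conversionsA, long usersA, long conversionsB, long usersB) {
        if (usersA <= 0 || usersB <= 0) {
            throw new IllegalArgumentException("each group needs at least one user");
        }
        double rateA = (double) conversionsA / usersA;
        double rateB = (double) conversionsB / usersB;
        // Pooled conversion rate under the null hypothesis.
        double pooled = (double) (conversionsA + conversionsB) / (usersA + usersB);
        double standardError = Math.sqrt(pooled * (1 - pooled) * (1.0 / usersA + 1.0 / usersB));
        if (standardError == 0) {
            return 0.0; // all users converted, or none did: no measurable difference
        }
        return (rateB - rateA) / standardError;
    }

    /** True if A and B differ significantly at the 95% level (two-sided test). */
    static boolean significantAt95(long conversionsA, long usersA, long conversionsB, long usersB) {
        return Math.abs(zScore(conversionsA, usersA, conversionsB, usersB)) > Z_CRITICAL_95;
    }

    public static void main(String[] args) {
        // Hypothetical counts: 1,000 users per variation.
        System.out.println(significantAt95(100, 1000, 130, 1000)); // true  (z ≈ 2.10)
        System.out.println(significantAt95(100, 1000, 110, 1000)); // false (z ≈ 0.73)
    }
}
```

With 1,000 users per variation, a 10% versus 13% conversion rate clears the 95% bar, while 10% versus 11% does not; small differences need far more users before they can be trusted.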

As always with Google Analytics, we have the full power of segmentation at our disposal, both for data captured out of the box (demographics, technology, behavioral patterns) and for data captured through our own custom implementations.

Experimenting With Selling Points

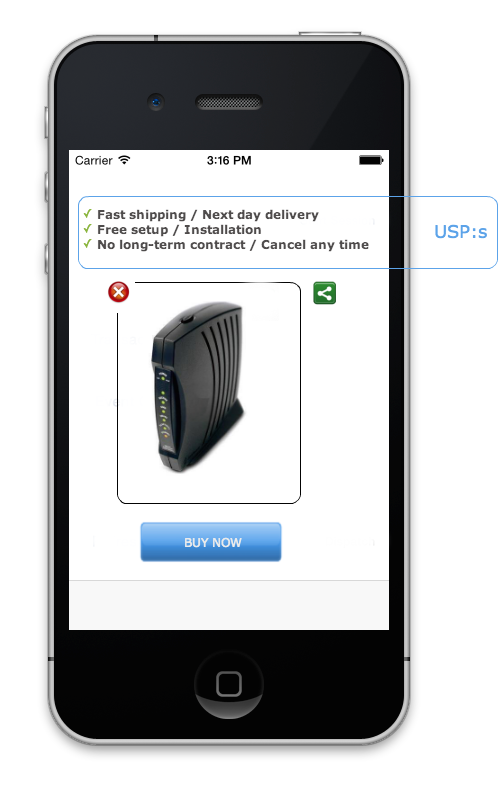

In this post, we will implement Google Tag Manager content experiments in a mobile app that displays a number of USPs (Unique Selling Points) to drive monetization, as shown below.

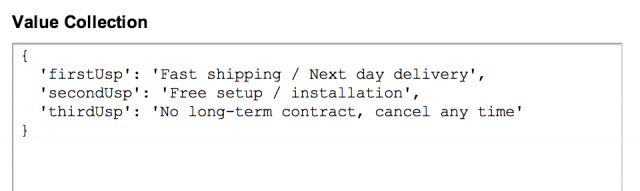

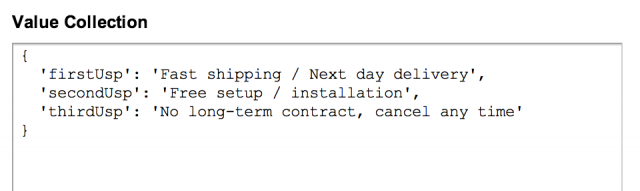

These USPs are actually pulled from a value collection macro in Google Tag Manager, where we can easily update them directly from the web interface if needed (fantastic!).

Assume we wanted to change these USPs for whatever reason. Maybe the marketing department has concluded that shorter USPs are the way to go nowadays, and user surveys support that assumption. Despite such indicators, this is clearly not a decision that should be made lightly or without data to back it up.

USPs are an essential part of the value proposition we present to persuade prospects to buy our product instead of someone else’s. They are part of what differentiates us. In fact, the smallest change to the phrasing of our USPs can have a critical impact on our conversion rate, and hence on our entire business. The copy needs to be just right in highlighting benefits, grabbing a prospect’s attention, and so on. Enter the data scientist. Instead of simply changing our USPs to a shorter, snappier version, we should run an experiment to see whether the marketing department is on the right track before making a permanent change. In this case, we will test our original copy against the following, shorter version:

- Fast shipping

- Free setup

- No long-term contract

As you can tell, the difference lies in the amount of detail we include. Besides satisfying marketing’s wishes, testing something like this will deepen our understanding of which messages our users respond to best, and can provide valuable insights for many business analyses to come.

In this example, we assume that we have already implemented GTM in our app following these instructions, and that the values of current USPs are being fetched from our container (at the moment, there is only one version of these USPs, the longer one).
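For readers who have not seen this setup, here is a minimal sketch of the app side. It assumes the USPs are stored under hypothetical keys `usp_1` to `usp_3`, and `ConfigSource` is a hypothetical stand-in for the container lookup (in the Android SDK, values are read from a `Container` by key, for example with `getString`). The point is that the app only knows the keys, so whichever values the container serves are displayed without a new release.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical stand-in for a GTM container: returns the value for a key, or null/empty if absent. */
interface ConfigSource {
    String getString(String key);
}

final class UspProvider {
    // Hypothetical key names; use whatever keys your macro actually defines.
    static final String[] USP_KEYS = {"usp_1", "usp_2", "usp_3"};

    private final ConfigSource source;
    private final Map<String, String> fallbacks;

    UspProvider(ConfigSource source, Map<String, String> fallbacks) {
        this.source = source;
        this.fallbacks = fallbacks;
    }

    /** Returns the USPs to display, preferring container values over compiled-in fallbacks. */
    List<String> currentUsps() {
        List<String> usps = new ArrayList<>();
        for (String key : USP_KEYS) {
            String value = source.getString(key);
            // Treat a missing or empty value as "not configured" and use the fallback instead.
            if (value == null || value.isEmpty()) {
                value = fallbacks.getOrDefault(key, "");
            }
            if (!value.isEmpty()) {
                usps.add(value);
            }
        }
        return usps;
    }
}
```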

Let’s get started!

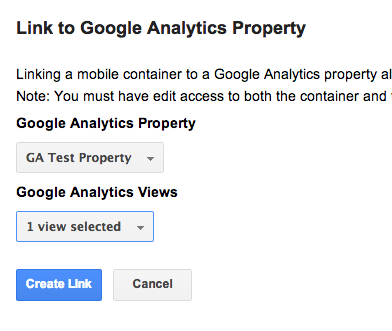

Step 1: Link the Container to Google Analytics

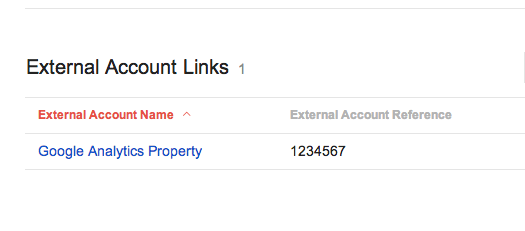

The first thing we need to do is link our Google Tag Manager container to a Google Analytics property. Here we also select the Views in which the experiment data should be surfaced. Note that we need edit access to both the container and the property to do so.

Once we have linked a property to our container, it will be visible under External Account Links in the Google Tag Manager interface.

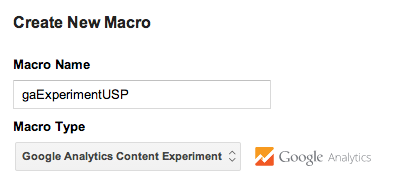

Step 2: Create Experiment Macro

Perhaps surprisingly, the way to configure our experiment is to create a new macro in Google Tag Manager. This makes sense, however, when we consider that what we want to test is ultimately a set of different configuration values, and in Google Tag Manager, values are always stored in macros.

Step 3: Configure Values

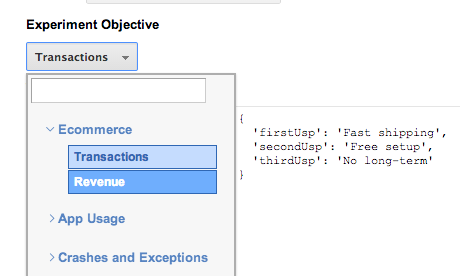

The next step is to define our USPs in this new macro. It takes JSON-formatted name-value pairs, as demonstrated below. The Original holds our long USPs, and Variation 1 holds the shorter ones. You can create up to 10 variations here.
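Because the app reads the same keys whichever value set a user is served, every variation should define the same names as the Original. The sketch below is a hypothetical pre-publish sanity check, not part of Google Tag Manager; the key names are assumptions, and the long USPs appear as placeholders since their exact copy is only shown in the screenshots.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Hypothetical model of an experiment macro: the Original value set plus its variations. */
final class ExperimentValues {
    // LinkedHashMap keeps insertion order, so the first set added is the Original.
    private final Map<String, Map<String, String>> valueSets = new LinkedHashMap<>();

    ExperimentValues add(String name, Map<String, String> values) {
        valueSets.put(name, values);
        return this;
    }

    /** Names of value sets whose keys differ from those of the first (Original) set. */
    List<String> setsWithMismatchedKeys() {
        List<String> mismatched = new ArrayList<>();
        Set<String> expectedKeys = null;
        for (Map.Entry<String, Map<String, String>> entry : valueSets.entrySet()) {
            Set<String> keys = entry.getValue().keySet();
            if (expectedKeys == null) {
                expectedKeys = keys; // the Original defines the expected keys
            } else if (!keys.equals(expectedKeys)) {
                mismatched.add(entry.getKey());
            }
        }
        return mismatched;
    }
}
```

A variation that forgot a key would otherwise fall back silently to an empty or default value in the app, which would quietly contaminate the comparison.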

Note that before we introduced the content experiment macro in our container, we simply stored the name-value pairs for the USPs in a standard value collection macro. That macro type only accepts a single set of values, and it should now be deleted, since the content experiment macro defines the USPs from now on.

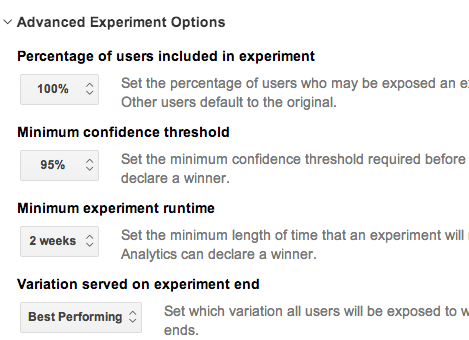

Step 4: Advanced Options

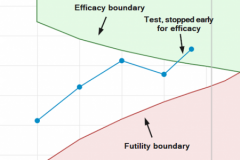

For the purpose of this demonstration, we will keep the defaults here: we will expose 100% of our users to the experiment, set the confidence threshold to 95%, run the experiment for two weeks, and, once we have a winning version, serve it to all our users going forward.
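Google Tag Manager and Google Analytics handle user assignment for us, so the following is only an illustration of what "exposing 100% of users" means, not how Google implements it. It assumes a hypothetical stable per-install user ID and uses a simple hash-based bucketing scheme: a user is exposed if their bucket falls below the exposure percentage, and is then assigned to one of the value sets.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

/** Illustrative hash-based assignment; not the algorithm Google uses. */
final class Bucketing {
    /** Returned when a user falls outside the exposed share and should see the Original. */
    static final int NOT_EXPOSED = -1;

    private Bucketing() {}

    private static long hash(String key) {
        CRC32 crc = new CRC32();
        crc.update(key.getBytes(StandardCharsets.UTF_8));
        return crc.getValue(); // unsigned 32-bit checksum held in a long
    }

    /**
     * Deterministically assigns a user: the same experiment and ID always give the same result,
     * so a user keeps seeing the same value set across sessions.
     */
    static int assign(String experiment, String userId, int exposurePercent, int valueSetCount) {
        if (exposurePercent < 0 || exposurePercent > 100 || valueSetCount < 1) {
            throw new IllegalArgumentException("invalid experiment settings");
        }
        int exposureBucket = (int) (hash(experiment + ":exposure:" + userId) % 100);
        if (exposureBucket >= exposurePercent) {
            return NOT_EXPOSED;
        }
        // A differently salted hash picks the value set (0 = Original, 1 = Variation 1, ...).
        return (int) (hash(experiment + ":variant:" + userId) % valueSetCount);
    }
}
```

Determinism is the key design choice here: if assignment were re-randomized on every launch, the same user would see both USP versions and the comparison would be muddied.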

Step 5: Select an Objective

We need to pick an objective for our experiment so that the system knows what to measure the variations against. This can be tied to our in-app monetization efforts, to user behavior, or to system behavior such as exceptions (when exceptions are the objective, the system naturally treats a lower rate as better).
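The direction of an objective matters when reading results. The sketch below shows how "better" flips between a revenue-style objective and an exceptions objective; the objective names are hypothetical labels rather than the exact list offered in the interface, and the ranking ignores statistical significance on purpose.

```java
import java.util.Map;

/** Hypothetical objectives; the real ones are chosen in the Google Tag Manager interface. */
enum Objective {
    REVENUE_PER_USER(true),
    EXCEPTIONS_PER_USER(false); // lower is better

    private final boolean higherIsBetter;

    Objective(boolean higherIsBetter) {
        this.higherIsBetter = higherIsBetter;
    }

    /** True if rate a beats rate b for this objective. */
    boolean isBetter(double a, double b) {
        return higherIsBetter ? a > b : a < b;
    }

    /** Name of the value set with the best observed rate (no significance testing). */
    String currentLeader(Map<String, Double> rateByValueSet) {
        String leader = null;
        for (Map.Entry<String, Double> entry : rateByValueSet.entrySet()) {
            if (leader == null || isBetter(entry.getValue(), rateByValueSet.get(leader))) {
                leader = entry.getKey();
            }
        }
        return leader;
    }
}
```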

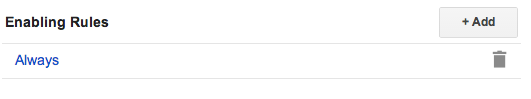

Step 6: Enabling Rules

Just as tags have rules, content experiment macros need rules that decide under which conditions they run (in addition to what share of users is exposed). We could, for example, decide to run our experiment only for app users of a particular language. In this case, we will simply set the rule to “Always”, including every user in our experiment.
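Conceptually, an enabling rule is a predicate over the user's context. The sketch below mirrors the two cases mentioned above, “Always” and a language restriction; the `DeviceContext` class and rule helpers are hypothetical, since GTM evaluates its own rules inside the SDK.

```java
import java.util.Locale;
import java.util.function.Predicate;

/** Hypothetical device context that a rule can inspect. */
final class DeviceContext {
    final Locale locale;

    DeviceContext(Locale locale) {
        this.locale = locale;
    }
}

/** Illustrative enabling rules; they only mirror the idea of GTM's rules. */
final class EnablingRules {
    private EnablingRules() {}

    /** Equivalent of the "Always" rule: every user is eligible. */
    static final Predicate<DeviceContext> ALWAYS = ctx -> true;

    /** Only users whose device language matches the given ISO 639 code are eligible. */
    static Predicate<DeviceContext> languageIs(String languageCode) {
        return ctx -> ctx.locale.getLanguage().equalsIgnoreCase(languageCode);
    }
}
```

For example, `EnablingRules.languageIs("sv")` would limit the experiment to Swedish-language devices, while `ALWAYS` matches the setup used in this post.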

Step 7: Download And Add Default Container

If this is the first time we ship our app with Google Tag Manager in it, we should add a default container to our project under /assets/tagmanager (again, for an introduction to implementing GTM in apps, please have a look at this article). Here, we assume we were already fetching these USP values from Google Tag Manager, and simply need to publish a new container with the new content experiment macro in it.
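One way to picture the default container's role is as the last link in a fallback chain. This simplified mental model, not the SDK's actual loading logic, assumes three hypothetical sources: a freshly fetched container, a container saved from an earlier session, and the default container bundled with the app. A first launch without a network connection ends up on the bundled default.

```java
import java.util.Map;
import java.util.Optional;

/** Simplified model of container precedence; not the GTM SDK's real loading code. */
final class ContainerChain {
    private ContainerChain() {}

    /** Returns the first available container, falling back to the bundled default. */
    static Map<String, String> resolve(Optional<Map<String, String>> fresh,
                                       Optional<Map<String, String>> saved,
                                       Map<String, String> bundledDefault) {
        // Optional.or requires Java 9 or later.
        return fresh.or(() -> saved).orElse(bundledDefault);
    }
}
```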

Step 8: Publish

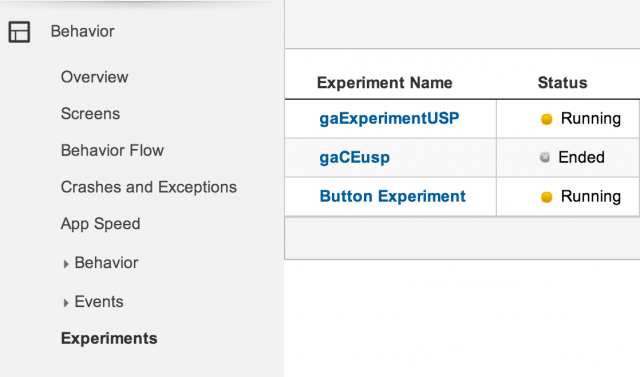

Finally, we need to publish this latest version of our container. This will start our USP experiment and create a new report for it in the Google Analytics interface, as shown below.

Start Analysing

And we’re up and running! We will be able to monitor the results of our experiment directly in the Google Analytics interface as it progresses.

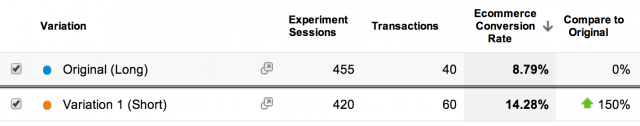

In the end, the shorter USPs outperformed the longer ones, indicating that marketing was in fact correct in its assumption and that we should switch to this copy permanently.

This, of course, raises a plethora of additional questions we should now ask ourselves about how we approach persuasion within our apps, and it brings us one step closer to being able to understand and model user behavior. One thing is certain: had we simply assumed that “less is more” and implemented the new copy straight away, we might never have been able to back up the assumption with data. Nor could we have told whether the shorter version performed better because of externalities we were not aware of, since we would never have tested it against the longer version simultaneously. By running the experiment, we empowered ourselves to derive the final decision from data and statistics.

Final Thoughts

Content experiments for mobile apps can be extremely valuable to a business. We no longer depend on long development cycles whenever we want to test something new. And when we do test, we can do so faster, more accurately, and with more certainty, avoiding costly mistakes based on hunches.

What are your thoughts about content experiments for mobile apps? We would love to hear your input!