Building a culture of optimization is an important topic, and one our industry as a whole is still in the process of nailing down: most organizations I see haven’t yet reached maturity here. I’ve presented on the topic at several conferences, webinars, and meetings recently, and given its importance I am sharing in this article some of the most important things I see, do, and recommend. This article covers the steps you will need to shift your organizational culture towards optimization and data-driven decision making in general. The main steps are education, process, and advocacy. Here is a quick summary of what you will learn:

- Culture Shift is an ongoing process…

- It doesn’t happen overnight, but can start with 1 big win

- Bring everyone along: Marketers, Sales, Devs, Eng, Execs

- Reach across groups / teams for test ideation & input

- Consider a ‘roadshow’ of big wins to different groups/stakeholders

- Have ‘one source of truth’ for your organization to reference for everything test related

Part 1: The Basics – Educate Educate Educate!

Educating your teammates is the first step in building a culture of optimization within your organization. Below are 5 basic principles & tips I generally cover with my teams early on when we talk about testing. Laying down these basics is important before you get into test design and execution.

1. What is Optimization?

Optimization is the ongoing, data-driven process of continually discovering and quantifying the most effective experience, both online and offline, for your consumers and customers. Testing and testing platforms, coupled with processes, enable organizations to adopt a culture of optimization.

2. What is a test?

There is a big difference between a true test and a time-series analysis. Many people mistakenly think they are testing when they launch a new website and report on the impact with a before & after analysis. To be a true test, you must run a control and a variant within the same time period while holding all other variables constant. This is one of the biggest mistakes I see: everyone thinks they are running a test, when all they are really doing is a time-series analysis.
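One common way to get a control and a variant running in the same time period is to split visitors as they arrive. Here is a minimal sketch, assuming a hash-based split. The function name `assign_variation`, the test name, and the visitor IDs are all hypothetical, and most testing platforms handle this step for you.

```python
import hashlib


def assign_variation(visitor_id: str, test_name: str, variations: list[str]) -> str:
    """Deterministically map a visitor to one variation of a test.

    Hashing the visitor ID together with the test name gives each visitor a
    stable, effectively random bucket, so the control and the variants collect
    traffic during the same time period instead of one after the other.
    """
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variations)
    return variations[bucket]


# Hypothetical usage: an even split between a control and one variant.
variations = ["control", "variant_1"]
print(assign_variation("visitor-123", "homepage_cta", variations))
```

Because the assignment depends only on the visitor ID and the test name, a returning visitor keeps seeing the same experience for the life of the test.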

3. Testing vs. Analysis

Which is appropriate depends on the question you are asking or the hypothesis you have. For example:

- Which layout works better? … test

- What content do people look at more? … analysis

While they are different things, testing & analysis go hand in hand. You should analyze your data set to find the opportunities to test, then test, then analyze the test results for insights and further test ideas. It’s a nice little circle.

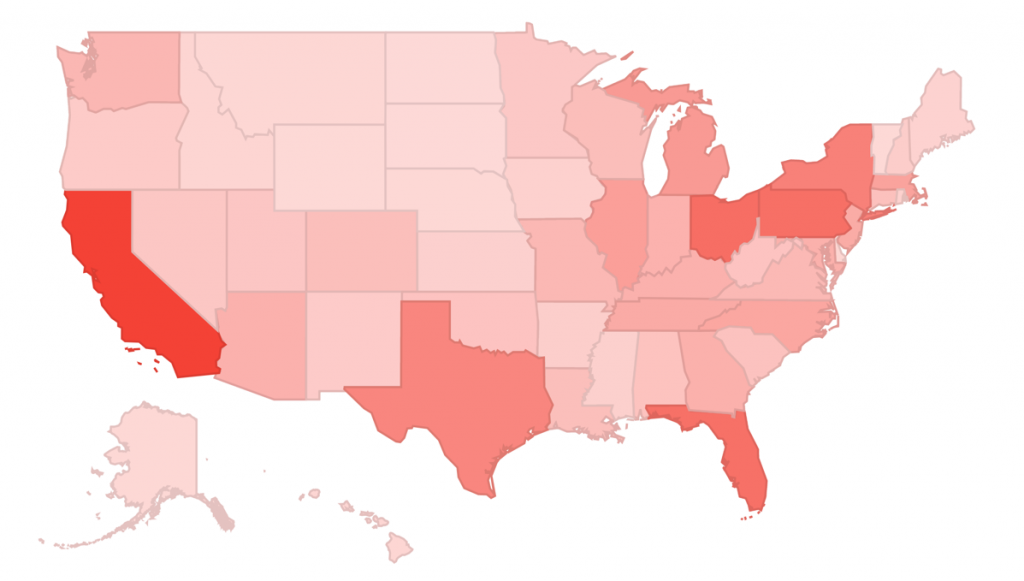

4. Know your limits: Traffic

We’d all love to run hundreds of tests at a time, but we only have so much traffic. The number of concurrent tests in market depends on the traffic to each site. For example, in a previous program I managed, most US English tests ran on ~5% of website traffic per variation, whereas most regional tests (Brazil, Russia, India, etc.) ran on ~25% or more of traffic per variation, because we got a lot less website traffic in those countries.
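The arithmetic behind those percentages can be sketched quickly. This assumes tests do not share visitors and that each test has a control plus one variation; both are assumptions for illustration, and the function name `max_concurrent_tests` is hypothetical.

```python
import math


def max_concurrent_tests(percent_per_variation: float, arms_per_test: int) -> int:
    """Number of non-overlapping tests that fit into 100% of a site's traffic."""
    percent_per_test = percent_per_variation * arms_per_test
    return math.floor(100 / percent_per_test)


# Control + 1 variation at ~5% of traffic each (the US English example).
print(max_concurrent_tests(5, arms_per_test=2))   # 10

# Control + 1 variation at ~25% of traffic each (the regional example).
print(max_concurrent_tests(25, arms_per_test=2))  # 2
```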

5. Given your traffic – is testing worth the effort?

Normally, I would say you should test everything. Launching a new website? Test it. Want to change the main CTA? Test it. New headline on your homepage? Test it. BUT you will definitely get requests to run a pretty vanilla test on a low-traffic website: for example, changing the subtext or H2 of a campaign landing page that gets 500 visits per week. Is it worth your time to test it? Maybe. But before you go to all the effort of designing a test, developing the assets, and executing & analyzing, be sure that this is something you absolutely need to test. Very likely, this test will need to run for a long time to reach a statistically significant sample size, and even then it may not reach confidence if the changes are too similar to the control.

It’s hard to say no to a test idea because the page doesn’t get enough traffic. It’s especially hard to say no when you’ve worked so hard to convince people to think about testing first. But knowing when it’s appropriate to test, and when you should just launch, is an important step in the maturity cycle for your testing program.
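To put a rough number on "a long time", here is a sketch of a standard sample-size estimate for comparing two conversion rates (normal approximation). Only the 500 visits per week comes from the example above. The 5% baseline conversion rate, the 10% relative lift, the 95% confidence, and the 80% power are assumptions chosen for illustration, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variation(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Approximate visitors needed in each variation to detect the given lift."""
    variant_rate = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + variant_rate * (1 - variant_rate)
    needed = (z_alpha + z_beta) ** 2 * variance / (variant_rate - baseline_rate) ** 2
    return math.ceil(needed)


def weeks_to_run(weekly_visits, baseline_rate, relative_lift, variations=2):
    """Weeks needed when all weekly traffic is split evenly across variations."""
    total_needed = visitors_per_variation(baseline_rate, relative_lift) * variations
    return math.ceil(total_needed / weekly_visits)


# Assumed: 5% baseline conversion rate, hoping to detect a 10% relative lift.
print(weeks_to_run(500, baseline_rate=0.05, relative_lift=0.10))
```

Under these assumptions the test would need to run for well over a year. A bolder change with a larger expected lift shortens that considerably, which is one reason subtle tweaks on low-traffic pages are rarely worth testing.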

So that’s the basics! Have you tried these out with your organization?

Part 2: Good Test Designs

You can’t have a good test without a good test design. One of the first things I do when a new test idea surfaces is sit down with the key stakeholders & test proposers to understand the details of what they’d like to test. We talk through the variables that are going to be tested and how best to set up & design the test, and make sure we are on the same page about potential test outcomes and about testing in a clean and consistent manner. To start, share what type of test is appropriate to meet the need.

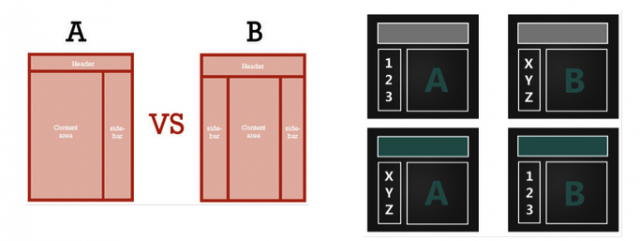

A/B test vs Multivariate test

- A/B/n test: compares two (or more) different versions

- Multivariate test (MVT): compares variations of multiple elements in one test

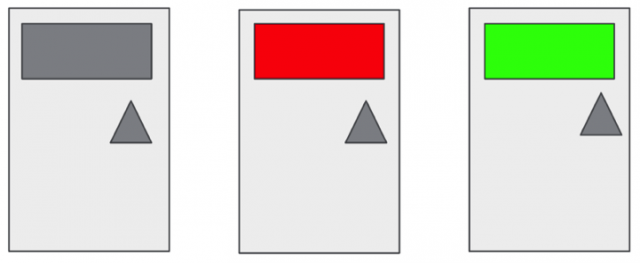

In the example below, the A/B test on the left compares a 2-column vs. 3-column layout while keeping all other variables constant. The MVT on the right looks at 2 different elements in combination: which color banner works best, and whether numbers or letters on the side column perform better.

Next, ensure your team understands the basic test inputs: what makes a control versus a test variation.

- Control: the current state of your website – no changes, should be the same as what is live to your users during the time of the test.

- Variable: the element on the site you want to change. For example, button color would be one variable.

- Variation: each test variation should change an isolated number of test variables. For example, test variation 1 could have a blue button while the control has a green button. Everything else should remain constant so you can isolate the impact of the one variable you are testing.

When designing a test I always recommend having a static control rather than relying on the untested portion of your traffic for comparison. This is important for a couple of reasons:

- You’ll be able to easily compare numbers because each variation & control will get the same percentage of traffic.

- You’ll be able to control (and share evenly) the impact of outside sources on your test variations & control variation.

Trust me on this one rather than learn the hard way (I learned the hard way… wasn’t pretty trying to explain to senior stakeholders why I had to throw out the results of what they thought was a perfectly fine test).

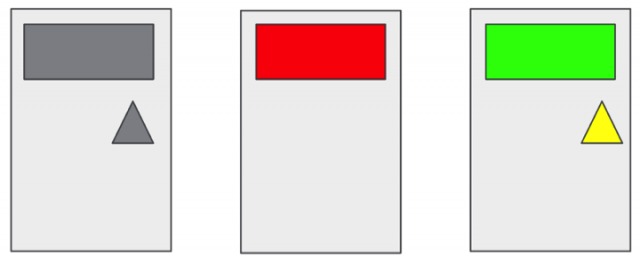

Good vs. bad test designs

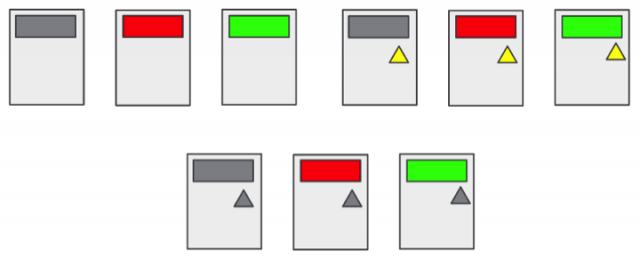

Bad test design

Why is this a bad test design? Too many variables are being tested: this test mixes banner color, placement of a button, and button color. Here are a few better test designs for these same variables:

[Figures: Good A/B/n test design 1, Good A/B/n test design 2, Good A/B/n test design 3]

You could also test this as one massive A/B/n test, or use some stats and a matrix calculator to come up with the necessary variations for a multivariate test.

[Figure: Good Multivariate test design]
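To see how quickly the variation count grows, the sketch below lists every combination of the three elements from the bad design. It enumerates the full set of combinations (a full factorial), not the reduced set a matrix calculator might produce, and the two values per element are hypothetical placeholders.

```python
from itertools import product

# Hypothetical values for the three elements mixed together in the bad design.
elements = {
    "banner_color": ["blue", "green"],
    "button_placement": ["top", "bottom"],
    "button_color": ["orange", "gray"],
}


def full_factorial(elements):
    """Return every combination of element values as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


variations = full_factorial(elements)
print(len(variations))  # 2 x 2 x 2 = 8 combinations
for variation in variations:
    print(variation)
```

Every one of those combinations needs its own share of traffic, which ties back to knowing your traffic limits before committing to a multivariate test.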

So why is this all important?

Simple: without a good test design you will not be able to report (with confidence) that what you are testing is any better or worse than what you currently have. Further, if you were to implement the results of a test with a bad design (one that did not control for variables), you may find very different results from what you anticipated because too many variables are at play. Finally, if you do find a large win with a bad test design (e.g., one with too many variables), you may not be able to tease out which variable truly caused the positive impact. This could limit your ability to further optimize these variables down the road.

That’s it. Here is a summary of good test design in a few simple steps:

- Choose the right kind of test, A/B/n vs MVT

- Create test variations to isolate the elements you want to test

- Ensure you have a control and variations with a controlled number of variables

Part 3: Know the Math

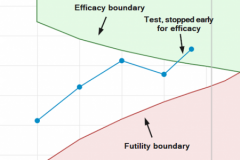

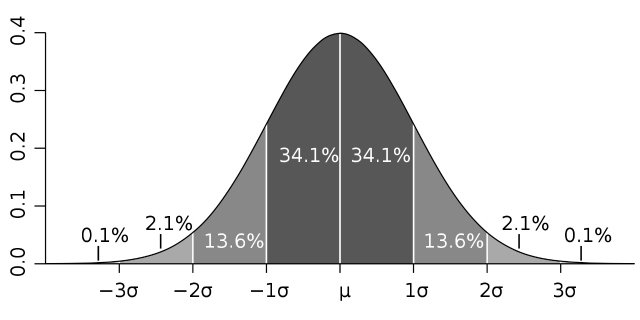

What does someone running an A/B or MVT test need to know about math? How detailed should they be? Here is what I tell all of my coworkers.

Statistical confidence = confidence in a repeated result

The confidence level, or statistical significance, indicates how likely it is that a test experience’s success was not due to chance. A higher confidence indicates that:

- The experience is performing significantly differently from the control

- The experience performance is not just due to noise

- If you ran this test again, it is likely you would see similar results

Confidence interval = a range within which the true value can be found at a given confidence level

Example: A test experience’s conversion rate lift is 10%, its confidence level is 95%, and its confidence interval is 5% to 15%. Roughly speaking, if you ran this test many times, about 95% of the intervals calculated this way would contain the true lift.

What impacts the confidence interval?

- Sample size – as a sample grows the interval will shrink or narrow

- Standard deviation or consistency – similar performance over time reduces standard deviation
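The effect of sample size on the interval can be sketched with the usual normal approximation. For simplicity this computes the interval for a single conversion rate, not for a lift, and the 10% conversion rate and visitor counts are made-up illustrative numbers.

```python
from statistics import NormalDist


def conversion_rate_interval(conversions, visitors, confidence=0.95):
    """Normal-approximation confidence interval for a conversion rate."""
    rate = conversions / visitors
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * (rate * (1 - rate) / visitors) ** 0.5
    return rate - margin, rate + margin


# Same 10% conversion rate, measured on ever larger (hypothetical) samples.
for visitors in (1_000, 10_000, 100_000):
    low, high = conversion_rate_interval(conversions=visitors // 10, visitors=visitors)
    print(f"{visitors:>7} visitors: {low:.2%} to {high:.2%}")
```

Each tenfold increase in visitors narrows the interval by a factor of about 3.2 (the square root of 10), so halving the interval takes roughly four times the traffic.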

In the diagram, while the range may seem wide, the majority (68.2%) of results will fall within one standard deviation of the mean of 10%.

That’s it. That’s all people need to know. In reality though, it’s not enough because it doesn’t go into details of how to calculate statistical confidence, but there are plenty of online tools to help with that. I like to give people a spreadsheet with a confidence calculator built in, so all they have to do is plug in their numbers. The real takeaway here, however, is that statistical confidence is important. I don’t actually care if my marketers know how to calculate it or even care to. All I really need them to know is that there is something mathematical that makes a difference for tests and that they should ask about it. In fact, after I gave a recent presentation on this topic, one of the attendees said he’ll forget what this stuff means by the next day, but he’ll remember he should ask me about it. Mission accomplished.
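For the curious, the kind of calculation such a confidence calculator performs can be sketched as follows. This is a standard pooled two-proportion z-test, which is one common approach and not necessarily what any particular spreadsheet or online tool uses. The function name and the visitor and conversion counts are hypothetical.

```python
from statistics import NormalDist


def confidence_level(control_visitors, control_conversions,
                     variant_visitors, variant_conversions):
    """Two-sided confidence (1 - p-value) that the two conversion rates differ.

    Assumes at least one conversion and one non-conversion overall, otherwise
    the standard error is zero and the calculation is undefined.
    """
    control_rate = control_conversions / control_visitors
    variant_rate = variant_conversions / variant_visitors
    pooled_rate = (control_conversions + variant_conversions) / (
        control_visitors + variant_visitors
    )
    std_error = (
        pooled_rate * (1 - pooled_rate) * (1 / control_visitors + 1 / variant_visitors)
    ) ** 0.5
    z_score = (variant_rate - control_rate) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z_score)))
    return 1 - p_value


# Hypothetical numbers: 10.0% vs 13.0% conversion on 1,000 visitors each.
print(f"{confidence_level(1000, 100, 1000, 130):.1%}")
```

With these made-up numbers the result clears the commonly used 95% threshold. Shrink the gap to 10.0% vs 10.5% and it falls far short, which is exactly the kind of thing marketers should be asking about.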

Part 4: Evangelize the Process

Process is important. Process leads to consistency, repeatability, and authority in a testing program. Sharing that process and getting others in your organization bought in and supportive is even more important.

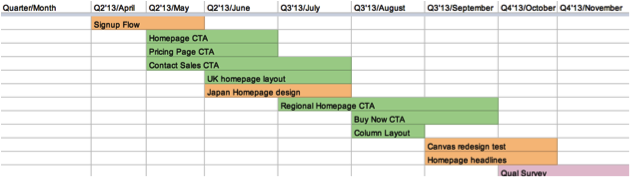

One source of truth

One of the best ways to make your optimization program better known within your organization is to evangelize it via a widely accessible & visible roadmap. Here’s an example roadmap that I’ve used in the past.

I host this roadmap in a Google doc that is accessible to everyone in my organization, from analysts to executives. This doc, however, is much more than just a roadmap. It also houses tabs that track all test variations with their descriptions, hypotheses, business justifications, launch dates, test plans, and results decks. Further, it has a tab for inputting new test ideas. Essentially, it’s a one-stop shop for education, ideas, and answers. Having this master test doc or roadmap easily accessible helps with process because it gives everyone a single place to go for all the resources they might need when formulating, suggesting, or analyzing a test. From a management standpoint, it’s also really useful to be able to point everyone to one source of truth, and doing so solidifies the consistency and repeatability aspects of a process.

Different perspectives matter

The final point I will put forth about evangelizing your program is to bring everyone along. Several of the most impactful and significant tests I’ve run came from ideas sourced across the greater organization: from engineers, developers, and salespeople. Of course we also get a lot of really great input from our marketing and analytics teammates, but the variety of perspectives and viewpoints from the greater org is a huge asset that is important to tap into. Including a wider group in your test ideation and execution also helps solidify relationships across groups and leads to more productive projects down the road.

Part 5: So You’ve Found a Big Win… Now What?

Ensure you’ve double- and triple-checked your results! Are they statistically confident? Did you control for external variables?

Why is this important? A personal example: I ran a test where we found significant uplift over our control from a couple of test variations, but one version stood out as the clear winner. After closing the test and reviewing and analyzing the data, I communicated the results and the recommendation to launch the winner to the rest of my organization. Most people were very excited. But given that I work in a data-hungry organization, an engineer questioned my findings. After a bit of digging into the logs, he noticed an exceptionally high amount of traffic from the Philippines going to the winning variation. When we went back and filtered out all non-US data, we actually had a different winner (thankfully still a good story). Oops! The test was supposed to be English/US only, so we hadn’t gone the extra mile to exclude other geos.

Lesson learned: I had to go back to my organization and let everyone know that I had been mistaken, and that we in fact had a very different winner for this test. Safe to say I only made that mistake once!
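A segment check like the one that engineer did can be sketched in a few lines. The visit counts below are entirely made up to illustrate the effect and are not the numbers from that test. The record layout and function names are also hypothetical.

```python
from collections import defaultdict


def make_visits(variation, country, visits, conversions):
    """Build toy visit records; the first `conversions` visits converted."""
    return [
        {"variation": variation, "country": country, "converted": i < conversions}
        for i in range(visits)
    ]


def conversion_rates(visits, country=None):
    """Conversion rate per variation, optionally restricted to one country."""
    totals = defaultdict(int)
    converted = defaultdict(int)
    for visit in visits:
        if country is not None and visit["country"] != country:
            continue
        totals[visit["variation"]] += 1
        converted[visit["variation"]] += visit["converted"]
    return {name: converted[name] / totals[name] for name in totals}


def winner(rates):
    """Name of the variation with the highest conversion rate."""
    return max(rates, key=rates.get)


# Made-up data: unexpected non-US traffic lands in variation B only.
visits = (
    make_visits("A", "US", visits=1000, conversions=100)
    + make_visits("B", "US", visits=1000, conversions=90)
    + make_visits("B", "PH", visits=500, conversions=100)
)

print("All traffic:", winner(conversion_rates(visits)))
print("US only:    ", winner(conversion_rates(visits, country="US")))
```

In this toy data the apparent winner flips once the out-of-scope traffic is excluded, which is why the intended audience filter belongs in the analysis before any results go out.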

Next – Make a deck!

Your deck should include the following:

- Executive summary

- Test design: traffic %, confidence, time period, variation screenshots, description of what you did (KISS – keep it simple, stupid!)

- Results

- Recommendation & Next Steps

Then… get it in front of all relevant stakeholders. Schedule a review meeting, send a wrap up email, communicate findings and changes to your broader organization.

Keep your results in a widely accessible place

In my spreadsheet (mentioned above), I keep all test designs and results in the roadmap tab so that everyone in the organization has a single place to go for info on current and past tests.

To wrap up this article, Culture Shift is an ongoing process

- It doesn’t happen overnight… but can start with 1 big win

- Keep the momentum

- Bring everyone along: Marketers, Sales, Devs, Eng, Execs

- Reach across groups/teams for test ideation & input

- Consider a ‘roadshow’ of big wins to different groups/stakeholders

- Have ‘one source of truth’ for your organization to reference for everything test related

There you have it. Follow these steps and you’ll be well on your way to educating your organization on the benefits of, and methods for, testing & optimizing your web properties. Soon you should also start to see a changing tide within your group.