What do your numbers mean? To measure anything objectively, numbers need to be highly specific, but to understand them we need more general language. Be it unemployment rates or web analytics, statistics often include, exclude and re-label individuals’ behavior, so understanding what people are actually doing means paying close attention to exactly what you are measuring.

Lebergott, The New Deal, and Unemployment

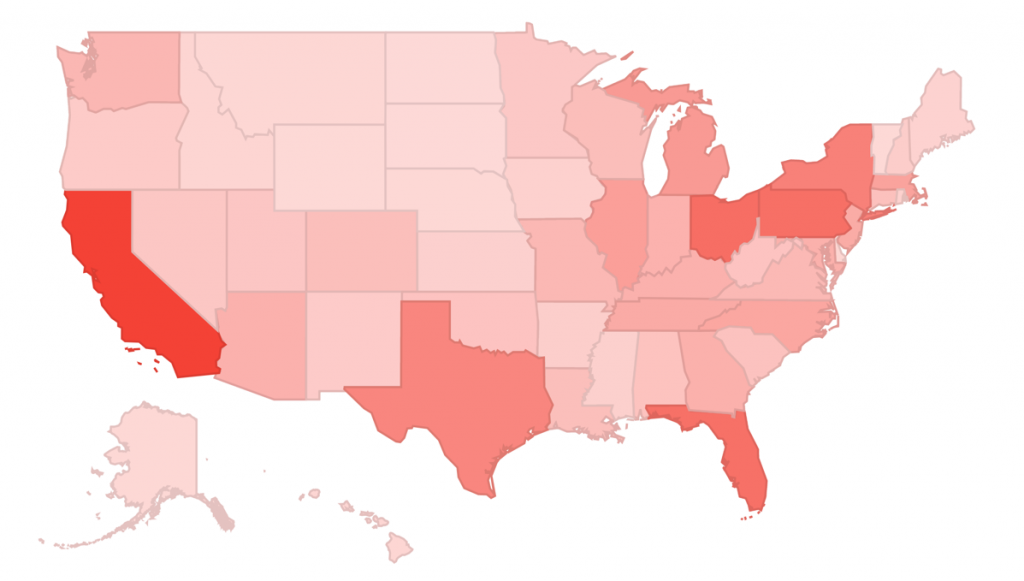

In 1940, between 9.5% and 14.6% of the US labor force was unemployed, depending on how unemployment was calculated (see note 1). Data collected by Stanley Lebergott – working for the Bureau of Labor Statistics (BLS) – painted a poor picture of the economy from 1929 to 1933. Despite the efforts of the Roosevelt administration, unemployment stayed stubbornly high. It was as if the New Deal did nothing at all. Then, in 1976, Michael Darby (note 2) looked back at employment for the same period and, like any good analyst, saw something hidden in the numbers.

Darby noticed that Lebergott counted people employed under government work programs as unemployed, even though the people in these jobs collected pay cheques and spent money just as other employed people did. From a modern economic perspective we would count these people as employed. Lebergott did not.

People criticize Lebergott for this; however, I think the criticism is unfair. Lebergott was gathering data according to the needs of his project. At the time, comparing the number of jobs was an important way of establishing growth in the job market, and emergency work program jobs would have improperly biased the interpretation of results (at least in the short term). By excluding work program jobs, Lebergott enabled a more direct comparison of the number of “regular” jobs, which was the comparison that best suited the BLS’s purposes. However, as economics grew as a field and its understanding of market changes evolved, the specificity of his metric misled those who did not pay enough attention to what the data actually meant. The result has been bad analysis of the Great Depression.

This is not a problem unique to Lebergott’s situation. People used Lebergott’s data without thinking about the specifics of his numbers: they assumed definitions for the metrics they were using, ignored the specifics and, in so doing, produced bad analysis.

Understanding Your Numbers

It’s not uncommon for analysts to find themselves in the same situation as post-Lebergott economists. Many metrics are – like Lebergott’s unemployment rate – designed to measure particular behaviors within specific contexts. This can lead to strange interpretations in less common situations, even with metrics as simple as time on page.

Bounces and Time on Page

Despite its name, Time on Page does not track the amount of time the visitor spends on a page, but rather the interval between successive requests for the web analytics tag. The result is a similar, but not identical, metric.

For instance, imagine someone comes to a site with Google Analytics installed. When s/he loads the page, JavaScript executes the analytics tracking code, recording a time stamp of ‘x’. S/he reads for 5 seconds, then loads a new page. The tracking code executes on the new page load and records a time stamp of ‘x+5’. Google Analytics then reports a time on page of 5 seconds for the first pageview.

However, if the user looks at page 1 for 5 minutes, then leaves without loading another page, there is no second time stamp recorded, and so Google Analytics has no idea how long the first pageview was.
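The rule described above can be sketched in a few lines of Python. This is a simplified model, not Google Analytics’ actual code: it assumes a visit is just a list of tag hits, each a hypothetical `(page, timestamp)` pair, and that a pageview’s duration is the gap to the next hit. Where there is no next hit, the sketch returns `None` to mark the duration as unknown.

```python
from typing import List, Optional, Tuple

# Hypothetical model of one tag firing: (page path, timestamp in seconds).
Hit = Tuple[str, float]


def time_on_page(hits: List[Hit]) -> List[Tuple[str, Optional[float]]]:
    """Return (page, seconds) for each pageview in a single visit.

    A pageview's duration is the gap until the *next* hit. The last
    pageview has no next hit, so its duration is unknown (None).
    """
    ordered = sorted(hits, key=lambda h: h[1])
    result: List[Tuple[str, Optional[float]]] = []
    for i, (page, ts) in enumerate(ordered):
        if i + 1 < len(ordered):
            result.append((page, ordered[i + 1][1] - ts))
        else:
            result.append((page, None))  # no second time stamp recorded
    return result


x = 1000.0  # arbitrary start time
print(time_on_page([("/page-1", x), ("/page-2", x + 5)]))
# [('/page-1', 5.0), ('/page-2', None)]
print(time_on_page([("/page-1", x)]))
# [('/page-1', None)]
```

The second call is the five-minute reader: with only one hit, there is nothing to subtract from, so the model cannot say how long the page was read.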

The upshot is that the time spent on single-page visits, and on the last pageview of any visit, is never measured. This shows up in two ways:

- Your analytics will show a lot of 0-second visits – everyone who reads only one page counts as a 0-second visit.

- The last page visited will have a time on page of 0 – since there is no hit after the last page, there is nothing to measure its duration against.
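Both effects come from the same rule. The sketch below is a hypothetical model of a report that simply shows a missing duration as 0, and of a visit duration measured from first hit to last hit; the function names and sample visits are illustrative assumptions, not Google Analytics’ API or data.

```python
from typing import List, Tuple

# Hypothetical model of one tag firing: (page path, timestamp in seconds).
Hit = Tuple[str, float]


def reported_time_on_page(visit: List[Hit]) -> List[Tuple[str, float]]:
    """Gap to the next hit; the last pageview has no next hit and shows 0."""
    ordered = sorted(visit, key=lambda h: h[1])
    report = []
    for i, (page, ts) in enumerate(ordered):
        next_ts = ordered[i + 1][1] if i + 1 < len(ordered) else ts
        report.append((page, next_ts - ts))
    return report


def reported_visit_duration(visit: List[Hit]) -> float:
    """First hit to last hit, so a single-page visit lasts 0 seconds."""
    stamps = [ts for _, ts in visit]
    return max(stamps) - min(stamps)


two_pages = [("/page-1", 0.0), ("/page-2", 5.0)]
one_page = [("/blog/new-post", 0.0)]  # e.g. a reader who stayed 5 minutes

print(reported_time_on_page(two_pages))   # [('/page-1', 5.0), ('/page-2', 0.0)]
print(reported_visit_duration(one_page))  # 0.0
```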

Most of the time these cases will be outliers and will not skew your data significantly. However, sometimes these numbers can surprise you. For instance, if you run a blog, quite a lot of users may follow you through RSS. Seeing that you have a new post, they may click “read more” and enter your site at the blog post page. Finishing the post, they close the page and move on. The result is a 0-second bounced visit. Bad, right? Except that they are doing exactly what you want them to do. They did not, in Avinash Kaushik’s well-known description of a bounce, come, puke, and leave. They engaged.

In both of these cases, how should we count these visits? Counting them as 0 seconds (or as bounces) is a little like Lebergott’s unemployed: they aren’t, really.
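The choice matters most once durations are averaged. Here is a minimal sketch, assuming per-pageview durations have already been extracted with `None` marking pageviews that had no following hit; the function name and the sample values are hypothetical. Counting the unknowns as 0 pulls the average down, while leaving them out reports only what was actually measured.

```python
from statistics import mean
from typing import List, Optional


def average_time_on_page(durations: List[Optional[float]],
                         unknown_as_zero: bool) -> Optional[float]:
    """Average pageview duration in seconds.

    None marks a pageview with no following hit, so its duration is unknown.
    unknown_as_zero=True counts those pageviews as 0 seconds;
    unknown_as_zero=False leaves them out of the average entirely.
    Returns None if nothing is left to average.
    """
    if unknown_as_zero:
        values = [0.0 if d is None else d for d in durations]
    else:
        values = [d for d in durations if d is not None]
    return mean(values) if values else None


# Hypothetical pageviews: two measured, three with no following hit.
durations = [5.0, None, 40.0, None, None]
print(average_time_on_page(durations, unknown_as_zero=True))   # 9.0
print(average_time_on_page(durations, unknown_as_zero=False))  # 22.5
```

Neither number is the “true” engagement. The first treats unmeasured visitors the way Lebergott’s series treated work-program employees; the second at least admits it does not know how long they stayed.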

Learning from the ’30s and from Time on Page

It’s easy to make assumptions about what a metric is, but in so doing you run the risk of drastically misunderstanding your data. This happens all the time, from the interpretation of 1930s employment statistics to modern web analytics. Maintaining focus on the specifics of your metrics is crucial to understanding the actual behavior of your visitors.

1. Robert A. Margo, The Microeconomics of Depression Unemployment, NBER Working Paper no. 18, December 1990.

2. Michael R. Darby, Three-and-a-Half Million U.S. Employees Have Been Mislaid: Or, an Explanation of Unemployment, 1934-1941, Journal of Political Economy 84, no. 1 (February 1976): 1-16.