Article contributed by Bea Dobrzyńska and Paolo Curtoni

There is a rising awareness across the search and SEM industry, still more in literature than in practice, of the value of site search logs, of the knowledge of consumer needs and behavior they contain, and of their potential as a means of increasing web sites’ ROI.

Search logs, however, present a set of challenges that can be summarized in one claim: “they contain human language data”. They record users’ intentions, what they want from a site, expressed in their own words: unstructured, unorganized text written in natural language, often in several languages.

In this article we describe these challenges, analyze the solutions proposed by a research project funded by the European Commission, and look ahead to when the results of that project will become available.

The Challenges Of Search Queries

Search queries are fragments of human language. Yes, but which human language? English? German? French? And how can one extract meaning from them? Languages are rich, and humans make full use of their flexibility and wealth. What is most characteristic of human expression is that a single meaning can be conveyed in a huge variety of ways. Most analytics systems list the most frequent queries, giving the impression that this is the most important information, and pay little attention to the quantity and quality of information in the “long tail”. Such analyses may obscure rather than clarify the real situation. It has been shown that only a few queries are identical and popular, while most are not: about 80% of the information remains in the long tail.

Identifying the language of a query is the first step in making sense of it. Of course the language of the browser and the connection IP address can help, but ultimately language identification must be based on the query itself. “Cane” has quite different meanings in “sugar cane” and in “cane da caccia” (Italian for “hunting dog”), and missing the language context leads to a misunderstanding of what the user wants. Language identification is a relatively easy task with long documents, but a completely different matter when it comes to search queries. Standard character-based models are not accurate enough, typically in cases such as “Richard Wright peinture”, where a likely English sequence of characters hides a French query.

The first challenge is represented by advanced language identification, taking into account not only characters but also words (dictionaries) and, in case of ambiguity, heuristic aspects such as the language of the browser or the connection IP address.
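As a rough illustration of combining dictionary evidence with a heuristic fallback, the sketch below scores a query against small per-language word lists and uses the browser language only to break ties. The word lists, the function name `identify_language` and the `browser_lang` parameter are assumptions made for this example; the character model mentioned above is left out for brevity, and this is not the method used by any particular system.

```python
# Toy per-language word lists (hypothetical); a real system would load
# full dictionaries and combine them with a character-based model.
DICTIONARIES = {
    "en": {"sugar", "cane", "painting", "book", "easy"},
    "fr": {"peinture", "livre", "sucre", "de", "la"},
    "it": {"cane", "da", "caccia", "pittura", "libro"},
}


def identify_language(query, browser_lang=None):
    """Return the language whose dictionary covers the most query words.

    Ties (including the case where no word is known) are resolved with the
    browser language hint when it is one of the tied candidates; otherwise
    the query is reported as ambiguous by returning None.
    """
    words = query.lower().split()
    scores = {
        lang: sum(word in vocab for word in words)
        for lang, vocab in DICTIONARIES.items()
    }
    best = max(scores.values())
    candidates = [lang for lang, score in scores.items() if score == best]
    if len(candidates) == 1:
        return candidates[0]
    if browser_lang in candidates:
        return browser_lang
    return None  # still ambiguous


print(identify_language("Richard Wright peinture"))
print(identify_language("cane", browser_lang="it"))
```

In this toy setup the single dictionary hit on “peinture” is enough to classify the first query as French, while the bare query “cane” is decided only by the browser hint.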

The second challenge is represented by normalization. When computing statistics and analytics it is necessary to abstract away from irrelevant variations in word occurrence. For instance, one might want to count the occurrences of “Book”, “BOOK”, “book” and “books” as the same item. The first three occurrences are easy to handle. Recognizing that “books” is the plural of “book”, however, is much less trivial, especially for languages with rich morphology. Moreover, in languages with written accents (such as French), one must also normalize accents, which are often omitted; in languages with compounding, one must be able to capture the semantic equivalence of the compounded and non-compounded expressions. Traditional stemming approaches do not provide reliable results in such cases.
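The easy parts of normalization, case folding and accent removal, can be sketched with the Python standard library alone. The lemma table below is a hypothetical stand-in for real morphological analysis, which is exactly the hard part described above; the names `strip_accents`, `normalize` and `LEMMAS` are assumptions of this example.

```python
import unicodedata
from collections import Counter

# Hypothetical lemma lookup standing in for a real morphological analyzer.
# Keys are already lowercased and accent-free.
LEMMAS = {"books": "book", "chevaux": "cheval"}


def strip_accents(text):
    """Remove combining accent marks after Unicode decomposition (NFD)."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def normalize(token):
    """Fold case, strip accents, then map inflected forms to a lemma."""
    folded = strip_accents(token.casefold())
    return LEMMAS.get(folded, folded)


counts = Counter(normalize(t) for t in ["Book", "BOOK", "book", "books"])
print(counts)
```

With this table all four variants are counted under the single key `book`. Compounds and productive morphology cannot be handled by a lookup of this kind, which is why the text argues for linguistic analysis rather than plain stemming.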

On-site search queries often come in great volume. Manual inspection is not an option, given the quantity of data and the fact that manual examination is error-prone. Standard analysis tools are not well suited to natural language data. The most you can get out of them is a list of keywords ordered by frequency, in which you will find a huge number of different queries bearing exactly the same meaning expressed in different words. What one would like to know is how many queries target a certain product, which categories were of most interest to users looking for content (irrespective of the actual contents offered in those categories), and whether there were unexpected queries or queries identifying a category which is not offered on the site.

The third and most significant challenge is to group search queries based on their meaning, or semantics. This challenge is complex enough that it is worth splitting into a set of sub-challenges, namely:

- Capability of matching (classifying) queries with categories of the site tree (or taxonomy): for instance “recipe”, “preparing a pancake” and “easy pancakes” should be mapped to the same category, “recipe”. It goes without saying that the matching strategy depends on the granularity of the categories.

- Capability of extracting and normalizing entities which are relevant for the specific site. For instance in the case of a site offering reproductions of paintings we might want to put together queries containing “Henri Matisse”, “Matisse”, “H. Matisse”, “Henri-Émile-Benoît Matisse”. Or in the case of a site offering printers we would like to consider as “same product” all occurrences of “Epson MX14”, “MX 14”, “Aculaser MX14”, etc.

- Capability of query clustering. It can be considered a variant of matching queries against the category tree, with the difference that there is no category tree and queries are grouped according to a meaning derived from the queries themselves. It is particularly useful for discovering new and unpredicted phenomena, such as finding that visitors to a site frequently search for a product that is not in the catalogue but could easily be added.
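The first two sub-challenges can be illustrated with plain lookup tables. The sketch below assumes hand-written keyword lists and alias tables (`CATEGORY_KEYWORDS`, `ENTITY_ALIASES`, `classify` and `find_entity` are all hypothetical names); a production system would learn these mappings from data rather than hard-code them, and clustering is not covered here.

```python
import re

# Hypothetical keyword lists per site category.
CATEGORY_KEYWORDS = {
    "recipe": {"recipe", "preparing", "pancake", "pancakes"},
    "printer": {"printer", "toner"},
}

# Hypothetical alias table mapping surface forms to one canonical entity.
ENTITY_ALIASES = {
    "henri matisse": "Henri Matisse",
    "h matisse": "Henri Matisse",
    "matisse": "Henri Matisse",
    "henri émile benoît matisse": "Henri Matisse",
    "epson mx14": "Epson MX14",
    "aculaser mx14": "Epson MX14",
    "mx14": "Epson MX14",
    "mx 14": "Epson MX14",
}


def tokenize(query):
    """Lowercase the query and split it into word tokens, dropping punctuation."""
    return re.findall(r"\w+", query.lower())


def classify(query):
    """Return the category sharing the most keywords with the query, or None."""
    tokens = set(tokenize(query))
    scores = {cat: len(tokens & kws) for cat, kws in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def find_entity(query):
    """Return the canonical entity for the longest alias found in the query."""
    tokens = tokenize(query)
    # Try longer spans first so "henri matisse" wins over "matisse".
    for size in range(len(tokens), 0, -1):
        for start in range(len(tokens) - size + 1):
            key = " ".join(tokens[start:start + size])
            if key in ENTITY_ALIASES:
                return ENTITY_ALIASES[key]
    return None


for q in ["preparing a pancake", "easy pancakes"]:
    print(q, "->", classify(q))
for q in ["H. Matisse", "Henri-Émile-Benoît Matisse", "MX 14"]:
    print(q, "->", find_entity(q))
```

Matching the longest alias first is a simple design choice that keeps a full name from being shadowed by a shorter alias contained in it.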

GALATEAS: Language Technologies for Log Analysis

In March 2010 the European Commission started a three-year research project called GALATEAS (http://langlog.galateas.eu/) whose goal is to:

Develop an innovative approach to understanding users’ behavior by analyzing language-based information (user queries) from transaction logs and thus facilitating access to multilingual content.

The objectives of the project point directly at the challenges presented above. The project consortium is a mix of institutions operating in the Natural Language Processing field (CELI Language & Information Technology, University of Trento, University of Amsterdam, Xerox Research Center), integrators (Objet Direct, GONETWORK), and digital library experts and users (Bridgeman Art Library, Berlin University). Most deliverables are available to the general public and describe how the challenges have been tackled:

- The language identification is based not only on a character model, but also on a set of heuristics which includes access to dictionaries of mainstream languages, syntactic analysis and consideration of non-query related aspects.

- Full syntactic analysis is used when considering normalization: the project is dealing with languages with both rich morphology (French and Italian) and compounding (German).

- The intelligent kernel of the GALATEAS log analysis system is represented by the language-aware components for automatic classification, named entity extraction and clustering. The three components have been realized as a mix of open source technologies (e.g. Mallet and Cluto) and proprietary technologies (available from CELI and Xerox) with the goal of maximizing portability across domains. Each has a module based on machine learning techniques which allows automatic reconfiguration when analyzing logs of different sites.

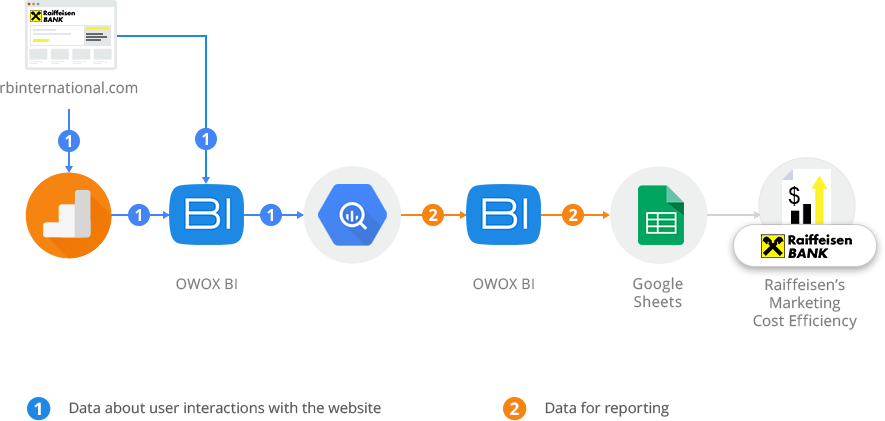

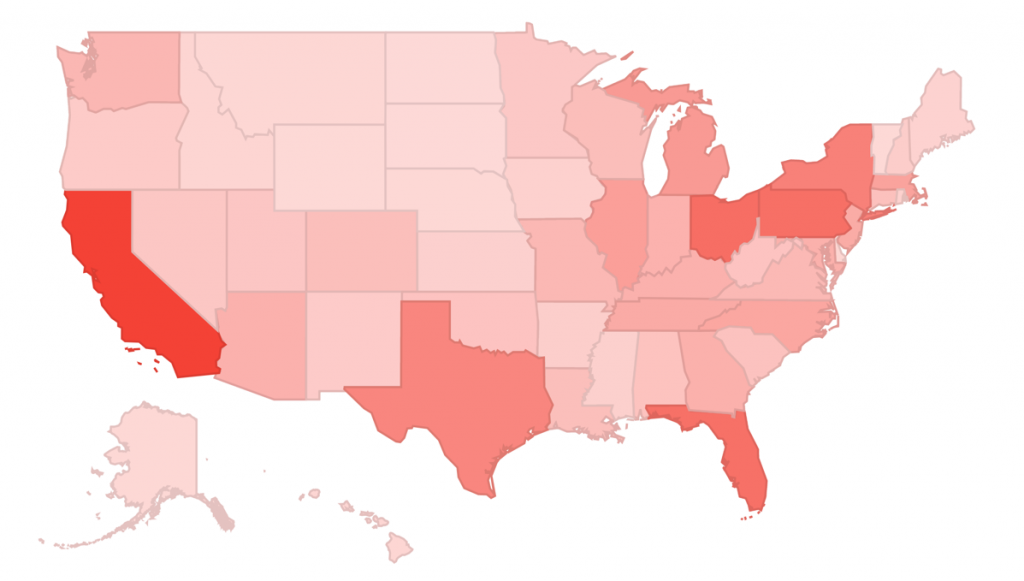

- The semantic part of the system has been integrated with reporting and business intelligence tools such as AWStats, QlikView, JasperReports, etc. Such an integration allows not only easy analysis of massive quantities of searches, but also integration with more structured data such as query time, click-through or session information. It allows easy discovery of correlated facts, e.g. that queries hitting a certain category always take place during the weekend, or that Pablo Picasso paintings are searched for mostly by users from Italy.
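Once each query carries a semantic label, correlating it with structured log fields becomes ordinary counting. The sketch below assumes a made-up log of (timestamp, country, label) records and two hypothetical helper functions; it only illustrates the kind of question such an integration can answer, not how the reporting tools named above work.

```python
from collections import Counter
from datetime import datetime

# Invented sample records: (ISO timestamp, user country, semantic label).
LOG = [
    ("2011-05-07T10:15:00", "IT", "Pablo Picasso"),  # Saturday
    ("2011-05-08T18:40:00", "IT", "Pablo Picasso"),  # Sunday
    ("2011-05-09T09:05:00", "FR", "Henri Matisse"),  # Monday
    ("2011-05-10T14:30:00", "IT", "Pablo Picasso"),  # Tuesday
    ("2011-05-14T11:00:00", "DE", "Henri Matisse"),  # Saturday
]


def count_by_country(log):
    """Count queries per (label, country) pair."""
    return Counter((label, country) for _, country, label in log)


def weekend_share(log, label):
    """Fraction of queries for a label issued on Saturday or Sunday."""
    days = [
        datetime.fromisoformat(ts).weekday()
        for ts, _, record_label in log
        if record_label == label
    ]
    if not days:
        return 0.0
    # weekday() returns 5 for Saturday and 6 for Sunday.
    return sum(day >= 5 for day in days) / len(days)


print(count_by_country(LOG).most_common(1))
print(weekend_share(LOG, "Pablo Picasso"))
```

The same grouping could be done on raw query strings, but the counts would be scattered across spelling variants; grouping on the normalized label is what makes the correlation visible.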

When Will It Be Available?

Research artifacts usually take some time to become marketable. The log analysis subsystem of GALATEAS, however, was the first milestone of the project and was achieved in May 2011. The consortium is already offering free trials to companies and institutions upon request.

Moreover, one of the consortium companies, CELI Language & Information Technology, is planning to launch a commercial search log analysis service under the name Smart Loggent in 2012. It will be interesting to see which functionalities of the GALATEAS system will be part of the commercial release. Given the core competence of the company in semantics, there is no doubt that all necessary components for language analysis and understanding will be there.

Bea Dobrzyńska and Paolo Curtoni work at CELI Language & Information Technology, a Natural Language Processing company whose aim is to drive business insight from multilingual unstructured information. CELI’s solutions help to uncover the meaning of text information and make it understandable, and therefore to determine the most strategic response. Research is a strategic strength of CELI, allowing the transfer of the most recent achievements to commercial applications.