A/B testing sounds like a “no-brainer”. You make two versions of a webpage (A and B), split traffic between them, and choose the version that produces the most conversions. That’s it! At its core, A/B testing really is that simple. Even if you are doing only this kind of simple A/B testing, you are probably far ahead of your competition. But to truly boost your conversions, you need to know some of the advanced A/B testing tactics that only experienced practitioners currently use.

Beyond Single Conversion Goal

In a split test, you usually measure performance on a single conversion goal. You put all your trust in that one goal to determine the winning variation. But have you ever thought about what impact the winning variation has on your other website goals? For example, suppose you have a website where you sell a product directly and also collect email addresses of prospective customers by offering a free gift. You decide to increase the conversion rate for your mailing list by testing different kinds of free gifts. You get a winning variation that increases the mailing list conversion rate by 50%. Should you go ahead and implement that winning variation?

Example of multiple conversion goals

No, not until you get another critical piece of information: what effect did that winning variation have on your sales? It is quite possible that the variation that increased your mailing list conversion rate actually decreased your sales, because visitors were satisfied after receiving their free gift. This is a scenario you must absolutely avoid. So, it is important to measure the impact of variations on all your website goals (including sales, bounce rate, signups, etc.).

Recently I stumbled across a case study where multiple conversion goals saved a business a lot of money. They were running a split test with the conversion goal being a click on the ‘Add to Cart’ button. After a few weeks of testing, they found that a particular variation increased conversions by 50%. Obviously, they were happy with the result. But when they looked at the actual sales data, they realized that final sales from that variation had decreased by 20%. This meant that even though the winning variation was great at sending visitors to the final checkout page, it wasn’t so good at motivating them to complete the purchase. Hence, a lot of visitors ended up abandoning the purchase after clicking the ‘Add to Cart’ button. If they had simply relied on the initial results, they might have lost a lot of money.
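
To make the idea concrete, here is a minimal Python sketch that compares a control and a variation on two goals at once. The counts are invented for illustration (they are not the numbers from the case study), and the names `VariationStats` and `compare_goals` are hypothetical. They are chosen only so that the result mirrors the pattern described above: more ‘Add to Cart’ clicks, fewer completed sales.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VariationStats:
    """Raw counts for one version of the page (illustrative numbers only)."""
    visitors: int
    add_to_cart_clicks: int
    completed_sales: int


def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who completed a goal; 0.0 when there is no traffic."""
    return conversions / visitors if visitors else 0.0


def relative_change(control: float, variation: float) -> float:
    """Relative lift of the variation over the control (0.5 means +50%)."""
    if control == 0:
        raise ValueError("control rate is zero; relative change is undefined")
    return (variation - control) / control


def compare_goals(control: VariationStats, variation: VariationStats) -> Dict[str, float]:
    """Return the relative change for every tracked goal, not just one."""
    goals = {
        "add_to_cart": (control.add_to_cart_clicks, variation.add_to_cart_clicks),
        "sales": (control.completed_sales, variation.completed_sales),
    }
    return {
        name: relative_change(
            conversion_rate(c, control.visitors),
            conversion_rate(v, variation.visitors),
        )
        for name, (c, v) in goals.items()
    }


# Hypothetical data: the variation wins on clicks but loses on sales.
control = VariationStats(visitors=10_000, add_to_cart_clicks=1_000, completed_sales=200)
variation = VariationStats(visitors=10_000, add_to_cart_clicks=1_500, completed_sales=160)

lifts = compare_goals(control, variation)
for goal, lift in lifts.items():
    print(f"{goal}: {lift:+.0%}")
```

Looking at the `add_to_cart` line alone, the variation is a clear winner. The `sales` line is what tells you not to ship it.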

Segmentation: The Magic Tool In Your A/B Testing Toolbox

Not all visitors are alike. Some of them are your customers, some are prospective customers, and some are simply researching your product. Different kinds of visitors behave in entirely different ways. Your “winning variation” may have won overall, but it may be performing sub-optimally for some visitor segments.

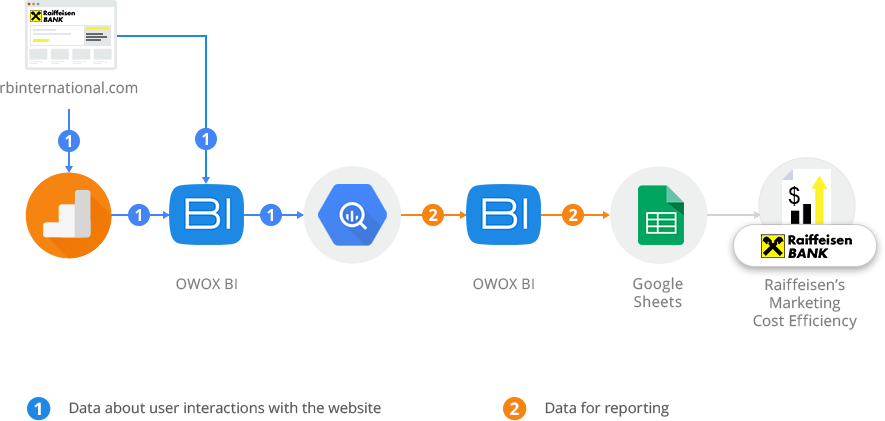

Pre-test segmentation

There are two kinds of segmentation strategies you can use in A/B testing. The first is called pre-test segmentation. Before starting the test, you define a visitor segment and run the test only for that segment. For example, you may want to define a segment of visitors who are actively researching your product. This segment consists of returning visitors to your website who still haven’t bought your product.

Example of split testing segmentation

So, in pre-test segmentation you define a segment and then run the test only for that segment, excluding all other visitors. For example, you run a test on “actively researching” visitors with a variation where your copy is convincing rather than merely informative (since “actively researching” visitors already know about your product). This is an interesting type of test because all other segments of visitors (your customers, first-time visitors, etc.) will see your default website, while “actively researching” visitors will be included in a test that is meant just for them.
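
As a rough sketch of how pre-test segmentation can work in code, the Python below defines the “actively researching” segment as returning visitors who have not purchased, and enrolls only those visitors in the test. The `Visitor` fields, the test name, and the hash-based 50/50 split are all assumptions made for illustration. A real testing tool will have its own way of identifying visitors and bucketing them.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class Visitor:
    """Hypothetical visitor record; a real tool would fill this from cookies or a CRM."""
    visitor_id: str
    is_returning: bool
    has_purchased: bool


def is_actively_researching(visitor: Visitor) -> bool:
    """Segment definition from the article: returning visitors who haven't bought yet."""
    return visitor.is_returning and not visitor.has_purchased


def assign_version(visitor: Visitor, test_name: str = "convincing-copy") -> str:
    """Return 'default' for visitors outside the segment, otherwise 'A' or 'B'."""
    if not is_actively_researching(visitor):
        return "default"  # excluded from the test entirely
    # Hash the visitor id so the same visitor always lands in the same bucket.
    digest = hashlib.sha256(f"{test_name}:{visitor.visitor_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


first_timer = Visitor("v-001", is_returning=False, has_purchased=False)
customer = Visitor("v-002", is_returning=True, has_purchased=True)
researcher = Visitor("v-003", is_returning=True, has_purchased=False)

for v in (first_timer, customer, researcher):
    print(v.visitor_id, assign_version(v))
```

Only the third visitor is ever shown A or B. The first two always see the default website, so they never dilute the results for the segment being tested.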

Targeting

The best part of pre-test segmentation is that if you find a winning variation for a segment, you can permanently target that variation to that particular segment. This means that you end up with multiple versions of your copy, each optimized for a different type of visitor segment. New visitors see a website optimized for them, while returning visitors see a website optimized for them. The overall boost in sales and conversion rate from this strategy can be much higher than from a simple split test run on all your visitors.

Targeting has the potential to bring additional revenue to your business with little additional effort. As an example, suppose you sell multiple products on your website. It is very likely that your existing customers come back to your website to read documentation or to contact support. Using cookies or other methods, you can detect whether a visitor is already your customer. Once you know that, you can show them banners and a homepage designed to upsell and cross-sell other products. People tend to engage more with personalized messages. So, even a simple banner such as ‘You purchased XXX, why not try YYY to add more value?’ is likely to increase your sales.

Example of behavioral targeting on Dell.com

An example of targeting can be seen in the Dell.com screenshots above. Version A is the webpage that all visitors see by default. Version B has a live support link that isn’t available in version A. Once a visitor has gone through several different support pages, version B is activated automatically. The idea is that if a visitor is browsing through several support pages, it is likely that their query hasn’t been answered, and live chat gives them an easy way to resolve the issue. Contrast this with a visitor who easily finds the page for their support issue. Showing the live chat option only to visitors who can’t seem to find a solution themselves saves tremendous costs for Dell.

You can employ a similar strategy on your website too. For example, you can offer a live chat option or an additional discount to visitors who have seen your pricing page and browsed many different pages on your website. With the latest testing and targeting tools, implementing such a strategy isn’t as difficult as it sounds.
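
A targeting rule like this usually boils down to a small condition on the visitor's session. The Python sketch below is one possible way to express it. The `Session` structure, the `/pricing` path, and the threshold of five distinct pages are all assumptions made for illustration, not settings from any particular tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Session:
    """Hypothetical record of the page paths a visitor has viewed so far."""
    pages_viewed: List[str] = field(default_factory=list)


def should_offer_live_chat(
    session: Session,
    pricing_path: str = "/pricing",  # assumed URL of the pricing page
    min_pages: int = 5,              # assumed cut-off for "many different pages"
) -> bool:
    """True when the visitor saw pricing and browsed at least min_pages distinct pages."""
    saw_pricing = pricing_path in session.pages_viewed
    browsed_many = len(set(session.pages_viewed)) >= min_pages
    return saw_pricing and browsed_many


quick_visit = Session(["/", "/pricing"])
long_visit = Session(["/", "/features", "/pricing", "/faq", "/docs", "/pricing"])

print(should_offer_live_chat(quick_visit))
print(should_offer_live_chat(long_visit))
```

The rule counts distinct pages, so reloading the pricing page several times does not trigger the offer on its own. The right threshold is itself something worth testing.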

Post-test segmentation

The second type of segmentation strategy in A/B testing is called post-test segmentation. Just like in pre-test segmentation, here too you create multiple segments of visitors (e.g. customers, first-timers, active researchers, etc.). The only difference is that in post-test segmentation you run the test for ALL visitors but later analyze the results separately for each segment. (Contrast this with pre-test segmentation, where you run the test only for a particular segment.)

The overall conversion rate usually leaves a lot of information on the table. Often, a variation that loses overall turns out to be the best variation for a particular small segment of visitors. Hence, it is very important to analyze your test results across different kinds of visitors. For example, if you are testing a free trial of your product vs. a money-back guarantee, you may see no difference overall, yet a winning variation may emerge when you analyze the results at the segment level. You can do this within your split testing tool (if it has the functionality), or you can integrate your testing tool with your web analytics tool to do such segment-specific analysis.
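
Here is a minimal Python sketch of post-test segmentation, assuming your tool can export one record per visitor containing the segment, the variation shown, and whether the visitor converted. The record format, the helper names, and all of the numbers are hypothetical. The data is constructed so that the two variations tie overall but differ within each segment.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# One record per visitor: (segment, variation, converted)
Record = Tuple[str, str, bool]


def make_records(segment: str, variation: str, conversions: int, visitors: int) -> List[Record]:
    """Build made-up visitor records with the given number of conversions."""
    return [(segment, variation, i < conversions) for i in range(visitors)]


def rates_by_segment(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Conversion rate per variation, for each segment and for all visitors combined."""
    # counts[segment][variation] = [conversions, visitors]
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variation, converted in records:
        for key in (segment, "all visitors"):
            cell = counts[key][variation]
            cell[0] += int(converted)
            cell[1] += 1
    return {
        key: {variation: conv / total for variation, (conv, total) in by_variation.items()}
        for key, by_variation in counts.items()
    }


# Hypothetical test: free trial vs. money-back guarantee, run on ALL visitors.
records = (
    make_records("first-timers", "free_trial", 10, 100)
    + make_records("first-timers", "money_back_guarantee", 20, 100)
    + make_records("active-researchers", "free_trial", 20, 100)
    + make_records("active-researchers", "money_back_guarantee", 10, 100)
)

rates = rates_by_segment(records)
for segment, by_variation in rates.items():
    summary = ", ".join(f"{name}: {rate:.0%}" for name, rate in by_variation.items())
    print(f"{segment}: {summary}")
```

In this made-up data both variations convert at the same rate across all visitors, yet each segment has a clear and opposite preference. Keep in mind that every segment contains fewer visitors than the full test, so segment-level differences need more scrutiny before you act on them.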

A recent A/B test on the Visual Website Optimizer homepage: video vs. text

As an example of post-test segmentation, I recently carried out a split test on my homepage where one variation had a video and the other had a textual description. I wanted to see whether the video decreases the bounce rate on the homepage. Overall, there wasn’t much difference, as only 5% of visitors played the video. But when we analyzed the same results for visitors who signed up for a free trial, we were astonished to see that 44% of those visitors had played the video. So, while overall it may seem that there wasn’t much interaction with the video, after segmentation it became clear that the video played a major part in convincing visitors to sign up.

Hopefully, the knowledge I shared in this article was new and useful to you. There are still lots of other interesting advanced testing techniques left to discuss (such as using heatmaps in A/B testing, follow-up testing, price testing, pre-testing feedback, etc.). The best way to become an A/B split testing ninja is to pick a tool that doesn’t limit your creativity and imagination for improving your sales and conversion rate.

Good luck! If you have any specific questions or feedback, feel free to get in touch with me at [email protected]